Meet William MacAskill, the puerile professor who helps crypto capitalists justify sociopathy today for a universe of transhuman colonization tomorrow. Please share this episode with your friends and start a conversation.

Warning: This podcast occasionally uses spicy language.

For an entertaining deep dive into the theme of season five (Phalse Prophets), read the definitive peer-reviewed taxonomic analysis from our very own Jason Bradford, PhD.

Sources/Links/Notes:

- Andrew Anthony, “William MacAskill: ‘There are 80 trillion people yet to come. They need us to start protecting them’,” The Guardian, August 21, 2022.

- Guiding Principles of the Centre for Effective Altruism

- Peter Singer, “Famine, Affluence and Morality,” givingwhatwecan.org.

- Sarah Pessin, “Political Spiral Logics,” sarahpessin.com.

- Eliezer Yudkowsky, “Pausing AI Developments Isn’t Enough. We Need to Shut it All Down,” Time, March 29, 2023.

- Emile Torres explains the acronym TESCREAL in a Twitter thread.

- Benjamin Todd and William MacAskill, “Is it ever OK to take a harmful job in order to do more good? An in-depth analysis,” 80,000 Hours, March 26, 2023.

- William MacAskill, “The Case for Longtermism,” The New York Times, August 5, 2022.

- Emile P. Torres, “Understanding ‘longtermism’: Why this suddenly influential philosophy is so toxic,” Salon, August 20, 2022.

- Nick Bostrom, “Existential Risks,” Journal of Evolution and Technology (2002).

- Nick Bostrom, “Astronomical Waste: The Opportunity Cost of Delayed Technological Development,” Utilitas (2003).

- Emile P. Torres, “How Elon Musk sees the future: His bizarre sci-fi visions should concern us all,” Salon, July 17, 2022.

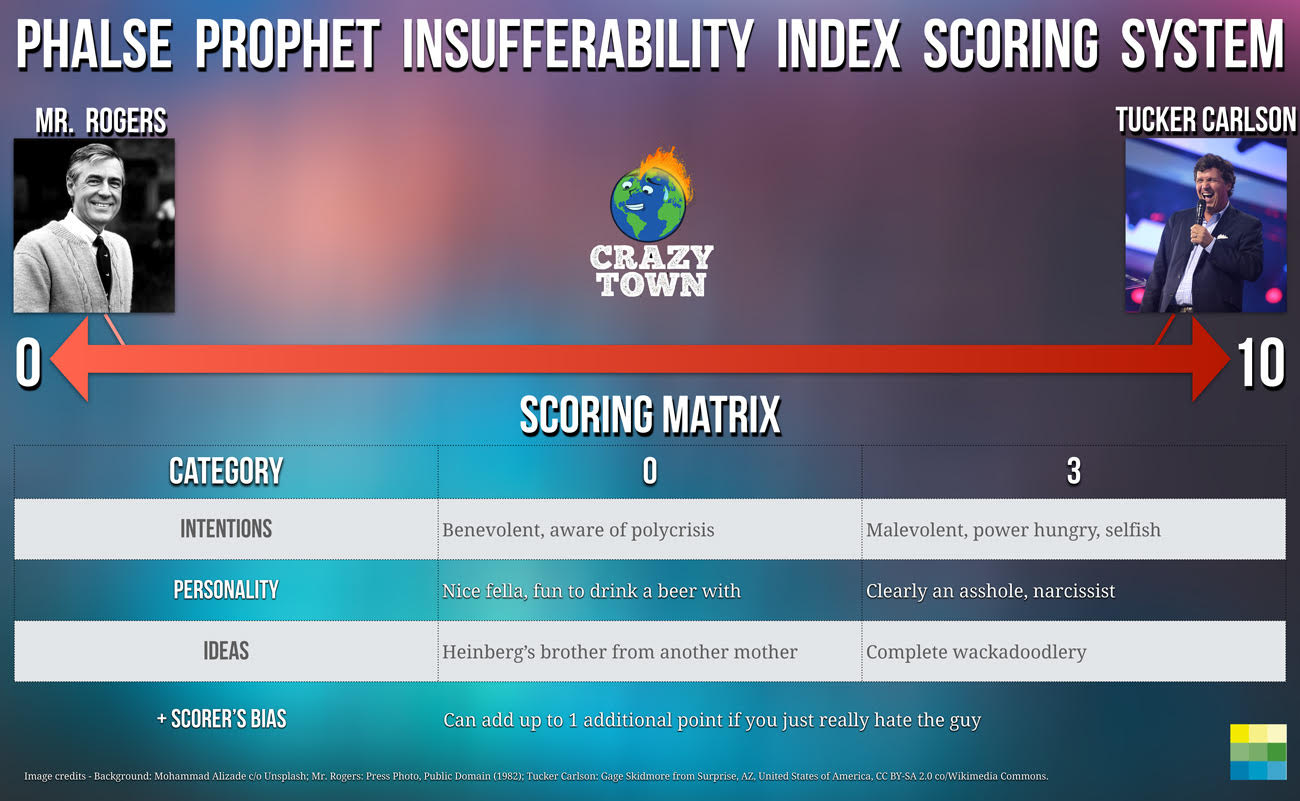

How would you rate this episode’s Phalse Prophet on the Insufferability Index? Tell us in the comments below!

It’s time for the annual Crazy Town Hall! This exclusive webinar on June 6, 2023 is our way of thanking listeners who support the show financially. We hope you’ll join us!


Transcript

Jason Bradford I'm Jason Bradford. Asher Miller I’m Asher Miller. Rob Dietz And I'm Rob Dietz. Welcome to Crazy Town, where we can't wait to meet our 80 trillion descendants on the other side of the cosmic wormhole. Melody Allison Hi, This is Crazy Town producer Melody Allison. Thanks for listening. Here in season five, we’re exploring Phalse Prophets and the dangerous messages they’re so intent on spreading. If you like what you’re hearing, please let some friends know about this episode, or the podcast in general. Now on to the show. Asher Miller Hey Jason, I gotta ask before we start today, when I was pulling up in front of your house, I noticed, you have all these excavators out in the field? Jason Bradford Yes, I do. Asher Miller Like a dozen of them. Jason Bradford Yep. Big deal. Asher Miller What's going on, man? Jason Bradford Well, you know, there's a lot of stuff going wrong in the world, or potentially wrong. What we call existential risks, you know. The gray goo AI kind of stuff, pandemic, nuclear war, all kinds of things. And if we're going to basically fulfill our potential as beings in the universe, we've got to make sure that if civilization goes under, they can come back. Rob Dietz That's good thinking. Asher Miller This is insurance? Jason Bradford Yeah. A lot of people think that either you're gonna go hide out in a bunker and be able to come out and re-boot civilization. But no way. Unless you're a hunter gatherer or a subsistence farmer, us moderns don't have the skills. So what I'm doing is I'm creating basically bunkers for groups of subsistence farmers and hunter gatherers that I'm going to import from various parts of the world. I'm not going to just get a single group of hunter gatherers or a single group of peasants. I'm mixing them together. We want that diversity. And they're going to live here. Asher Miller So not a monoculture of hunter gatherers. Jason Bradford No I'm not. 
I'm bringing in - Actually, I've got some New Guineans lined up. Some recently contacted tribes from the Amazon. I'm working on getting some Sentinelese from the Bay of Bengal. That's really tough. They try to kill you when you land. And I've got Andean peasants. I've got some Romanians. We're getting some Amish in here. So really, really solid crews, and they're going to be on 12 hour shifts. Asher Miller I was just thinking that you're doing rotational farming, basically? Jason Bradford Well, if things go wrong, you know, if the asteroid strikes, whatever, we need half of them at least in the bunkers at any one time, right? But they also have to come out to maintain their skills, get the vitamin D, etc. So I feel like I'm fulfilling my potential by doing for, you know, humanity, and the future generations. Rob Dietz Okay, I'm getting close to getting sick here. I'm near the vomit point. So I gotta call a little timeout on this - What do we even call it? A sales pitch? Jason Bradford Yep, this is legendary. This is going to be huge. Rob Dietz Well, let's just say for now that this is not Jason's cockamamie idea. He's making fun of a cockamamie idea that we're going to get to in a little bit. But in order to get there, we got to tell you about a guy named William MacAskill. Okay? He's our phalse prophet for today. And let me just start running through a little bit of his life to, say, get a sense of him. And then we'll move on to the cockamamie ideas. Jason Bradford I was kind of getting excited about it, actually. Rob Dietz Yeah, I know. I know. Sorry. Okay, so MacAskill is probably the youngest of our phalse prophets so far. Jason Bradford Congratulations. Asher Miller What is he, 12? Rob Dietz Yeah, yeah. 11. No, he was born in 1987 in Scotland. His actual name when he was born was William Crouch, but - I don't know why that's funny. Asher Miller I just imagined he was crouching. Jason Bradford He was stooping a little. Asher Miller William, crouch! 
Rob Dietz Oh, it's a sentence. I gotcha. Asher Miller You gotta do it with a Scottish accent. William, crouch! Rob Dietz I thought we decided no more accents. After the Bill Clinton episode we had to put that away. Asher Miller You say this now. You're the one who gets to do all of the accents. Jason Bradford Yeah, I know. Only you can do accents. Rob Dietz Oh, no. This show is off to a bad start. Okay, back to William Crouch/MacAskill. He actually was kind of a progressive guy. So he and his partner both changed their last names when they got married. So that's how he ended up being William MacAskill. Asher Miller I gotta say I appreciate going with a new name versus the hyphenation thing. That gets long. Rob Dietz Yeah, it can get tedious. Yeah. Well, and so I think he had a pretty kind heart. When he was 15, he learned about the scope of people who are dying from the HIV epidemic and he kind of made this resolution, when I get older, I'm gonna make a ton of money so that I can give it away and become a philanthropist. So he went to Cambridge. So I guess he's got the academic chops. He studied philosophy. And he got impacted by this guy, Peter Singer, who wrote a pretty famous, influential essay called "Famine, Affluence, and Morality." And we're gonna get more into that later. And then he goes on to get his PhD in philosophy. Yes, that's a Doctor of Philosophy in philosophy. And now he's an associate professor at Oxford, and he's the chair of the advisory board of the Global Priorities Institute. Pretty highfalutin. Jason Bradford We've been making fun of a lot of the Ivy League schools in the U.S. I'm so happy we get to - Asher Miller Yeah, we get to make fun of the Brits. Jason Bradford I know the Brits. Like Cambridge, Oxford. This is great. Rob Dietz The true Ivy right? Jason Bradford Oh yeah. This is old school Ivy. This is great. Rob Dietz I mean, the buildings were probably actually made of ivy at this point. Jason Bradford They were cloaked in ivy. 
Okay, so he's founded a few organizations, including the Centre for Effective Altruism. If you look it up it's spelled "t-r-e." Just be ready. Asher Miller Yeah, all about British - Jason Bradford Oh yeah, yeah. It's confusing. It argues for bringing data-based rational decision making to philanthropy. Rob Dietz Well, it sounds alright. Jason Bradford Yeah, a group called Giving What We Can. And this is sort of like tithing your earnings to effective charities. Rob Dietz Sounds alright too. Jason Bradford Yeah. 80,000 Hours, which is sort of the idea that represents how many hours you're likely to work in a professional capacity. And so it is advising people on how to think through career choices that have the greatest social impact. Rob Dietz That sounds alright too. Jason Bradford I know, this guy's a star. He's a vocal supporter of animal welfare. This led him into vegetarianism. Nice. Live by your principles. He's best known, however, for being the leading voice of what is known as Longtermism. And he laid out what this means, his philosophy, in a very recent, apparently best-selling book called "What We Owe the Future: A Million-Year View." And I remember getting ads like this like crazy through New York Times and stuff. I mean, this was really, really pushed. And it's this longtermism that we are sort of bringing up, and he's representing that as our phalse prophet. Rob Dietz If we were to just stop here, you'd have to say, "You know what? Maybe this guy is an actual prophet instead of a phalse prophet." Jason Bradford What's not to love, right? Yeah. Yeah. But we hinted at that with my fantasy about the bunker. Rob Dietz So it was a fantasy then? We're clear on this now. Asher Miller Some strange fantasies. So our Crazy Town devotees who listen to every one of our episodes may recall that I did an interview with Douglas Rushkoff, and we talked a little bit about the longtermism stuff. 
But we're going to really unpack that here today, and talk about why it's such a dangerous philosophy. So we're going to focus on longtermism, but, and this is - Part of what's really tricky about it is I think we have to place it in the context of a lot of other concepts. And that's partly because it helps people sort of like understand what we're dealing with. And also because some of these other terms are also commonly used, or they're sometimes used interchangeably. They're used in different contexts. But what's important about them is that they're all connected sort of in an overarching philosophy, right? So the key thing is, if you hear any of these terms - It's not only understanding, like we want to present some of these concepts so you understand where longtermism comes from, but for your ears to be tuned to these terms that you might hear. Because then you'll know, "Oh, this represents this certain type of thinking." Rob Dietz Alright, if we're gonna start throwing around terms, can I just make the request that we don't use the word ontology or ontological at any time during this? Jason Bradford I was not planning on it, but you just did that. Asher Miller You actually did do that. Jason Bradford Okay? Okay, so someone who's really helped us understand how these are all connected, how these terms are connected, is Emile Torres, one of the most vocal critics of Effective Altruism and longtermism. And Emile is a reformed longtermist who actually had been a research fellow for our previous phalse prophet, Ray Kurzweil. Rob Dietz Wow. So Emile has escaped from the cults. Jason Bradford Yes. And Emile goes by they, and they have an actual acronym for this mishmash of all these related philosophies and concepts. Jason Bradford It's B-U-L-L-S-H-I-T. Easy to remember. Jason Bradford Okay, that's the easiest to remember. Unfortunately, this one's not as easy but - It's what's called TESCREAL. Okay? It sounds like something you'd push out. 
Jason Bradford It sounds like a hormone. Rob Dietz Some sort of disease, maybe. I went down to the public bathhouse and came away with TESCREAL. Jason Bradford And now you're on antibiotics. Okay, well it stands for - I might have some trouble with these words so I need you to step in if I flounder. Rob Dietz Okay, yeah. You almost said an anachronism for acronym. Asher Miller Well because there's so many "isms" in this acronym. Jason Bradford Oh my god. This is ridiculous. Are you ready for all the isming? Rob Dietz Yeah, okay. Jason Bradford Okay, we got transhumanism. Jason Bradford We’ve got extropianism. We've got, oh this one is going to be rough, singularitarianism. We've got cosmism. We've got rationalism, Effective Altruism, and longtermism. So that's what TESCREAL all stands for. Asher Miller Okay. Rob Dietz Right. I'm gonna say that's a lot. So, I don't think we've got to cover what every single one of those means. Asher Miller We're just gonna do an episode on each one. Rob Dietz We want to get to longtermism, but like you said earlier, Asher, we've got to set some context here. Jason Bradford And a few of them, they are so related that it kind of covers the bases. Rob Dietz So, why don't we hit unilater - I've got Jason disease. No. Utilitarianism, Transhumanism, Effective Altruism, existential risk, and then we'll be safely arriving at our destination of longtermism. Jason Bradford Okay, and some of these are not exactly in TESCREAL, but they're related important ideas in lots of these, so. . . Asher Miller Alright. Well, let's start with utilitarianism. And I think, let's go in a certain order, because I think the order is almost like these are building blocks of concepts. So utilitarianism is probably the oldest of these. And it's a philosophy that dates back to Jeremy Bentham and John Stuart Mill, you know, the late 18th century, early 19th century. 
And basically, all you need to know about utilitarianism is that it argues that the most ethical choice you can make is the one that will produce the greatest good for the greatest number of people. Okay? Pretty basic. Jason Bradford Pretty basic. Rob Dietz Pretty easy to agree with. I mean, just generally. Rob Dietz For the most part. Rob Dietz In general. Jason Bradford You know, devil’s in the details, maybe of like, who decides and how do you decide and - Asher Miller Ah, that's what we're gonna be getting into later. Jason Bradford Okay. Alright, then we're gonna go to my favorite, transhumanism. According to Emile Torres, transhumanism is, and I quote, "Ideology that sees humanity as a work in progress. It is something that we can and should actively reengineer using advanced technologies like brain implants, which could connect our brains to the internet, and genetic engineering, which can enable us to create super smart designer babies." So we cover this a lot with Ray Kurzweil. Rob Dietz I've also seen a lot of sci-fi, and it usually doesn't end well. Jason Bradford Yeah, usually not really. Asher Miller It's interesting, the first part of that, humanity is a work in progress, something that we should actively, you know, re-engineer. Like, okay. Took a little turn there. Jason Bradford Some red flags start waving in the wind here. Asher Miller Alright, let's talk about Effective Altruism, because I think Effective Altruism is really probably the most commonly used term to sort of define this space of philosophy and initiatives that people are working on. It's something that is really intrinsic, I think, to our phalse prophet MacAskill. So, according to the Centre for Effective Altruism, which is something again, that Jason, you talked about MacAskill co-founded. Effective Altruism is quote, "About using evidence and reason to figure out how to benefit others as much as possible, and taking action on that basis." Jason Bradford Yeah, sounds good. 
Asher Miller So you can see how it builds off of utilitarianism. Jason Bradford Love it. Rob Dietz Agree. Asher Miller It promotes using data and dispassionate reason to identify what giving would have the greatest impact, right. So you see, and this is actually pretty common in philanthropy, where people like to use metrics and you know, different ways - Where you're not just being tugged by heartstrings, or whatever. You're trying to think about the greatest impact you can make. Okay? And it comes from the Australian moral philosopher, Peter Singer. You know, you talked, I think you mentioned him, Rob, when you were talking about the influences on William MacAskill. He's commonly viewed as the founder of EA when he published that paper in 1972, "Famine, Affluence, and Morality." And basically, he wrote it in response to this humanitarian crisis that was happening in East Bengal where there were many people that are suffering from famine, huge humanitarian crisis that was happening there. And he was arguing that there was insufficient aid coming from wealthy nations looking at this situation. So in the paper, he argued, quote, "If it is in our power to prevent something bad from happening without thereby sacrificing anything of comparable moral importance, we ought morally to do it." So basically, like, if it's not going to create an equally bad outcome consequence, it's our moral responsibility to help, right. And he also argued that quote, "If we accept any principle of impartiality, universalizability, equality or whatever, we cannot discriminate against someone merely because he is far away from us, or we are far away from him." Right? So he's saying, let's say the life of a child in East Bengal is worth the equivalent of the life of someone in your neighborhood. Rob Dietz Well, let the distortions begin now, shall we. If he's the kind of originator of this philosophy, it's started to take a turn for the worse. 
And this is one of the core ideas behind modern day Effective Altruism philosophy is that you got to earn to give. So it kind of goes back to MacAskill's youth of I want to grow up to be a kind of philanthropist, right? Jason Bradford I know. It's amazing. Rob Dietz And you have this sort of rationale that whatever your job is, just go out and do it and get as much money as you can so that you can then become an effective altruist. Jason Bradford I think they also do say like, they asked a question, I saw this in some of Nick Bostrom's writings, we'll talk about him a little bit. That, of course, you don't necessarily want to be engaged in like gun running or something like that. Jason Bradford There are limits. Jason Bradford There are limits. Right. Rob Dietz But there's kind of an irony here, because what you just said, Asher, of the life of a child in one part of the world is equivalent to the life of a child in your neighborhood. This kind of rationale is sort of doing this weird time thing where it's saying, the suffering of people today is not worth thinking about when you're talking about the potential well-being of all these future people. Asher Miller That will get us straight into longtermism, which we'll get to in a second. One thing I wanted to say about this earn to give philosophy, I've encountered this quite a bit in my own life. Asher Miller Yeah. So after college, I went and I worked at the Shoah Foundation, where we were documenting stories of Holocaust survivors. I didn't necessarily think I was going to be somebody who dedicated my career to doing nonprofit work. At the time, I wanted to be a writer, right. And I was going to write this book about a family of Holocaust survivors I was really close to. And in the process of preparing for that, I was writing a book for another Holocaust survivor. I was burned out. And I was living in Europe with my future wife, and then came back to the United States. I was like, what am I going to do now? 
And I was lucky enough to get interviews with folks, some really interesting people that my dad was connected to in the Bay Area. And I remember meeting with this one guy, and you know, he was just kind of like asking me, he did a favor to my dad talking to me in my mid 20s about what I wanted to do. And I was like, you know, the dot-com boom was in effect at the time. Jason Bradford Really? Jason Bradford Oh, yeah. Asher Miller And I was like, I really want to do well. But I really want to do something meaningful and worthwhile. And basically, this guy, I'll never forget him. He's like a VC guy, venture capitalist guy. He's like, "That's fucking stupid." He's like, "That's ridiculous. Make your money now and then you can give it away later. That's a way more effective way of helping the world." You know, he like just literally told me I was a fucking idiot. Jason Bradford For even imagining. Just go make piles of money. Asher Miller Go make your money. Rob Dietz I love it too, because he's also putting that message out with just sell your soul. You know, go do some work that you don't give a shit about as long as the piles of money are rolling in. Asher Miller And he was completely convinced that that was the right thing to do. Jason Bradford Okay, okay. Well, alright. So the other important thing to look at with this is what's called existential risks. And I remember when I first actually started learning about this whole philosophy, TESCREAL whatever, through the lens of existential risks. I remember being just struck by the fact that these folks in Oxford were like publishing about existential risk. Like wow, that's cool. You know? Asher Miller Because a lot of our work is about risks too, right? Jason Bradford I know, I'm like, Oh my God. We've got well funded Oxfordians out there doing stuff. This is fantastic. So Nick Bostrom, also at Oxford, MacAskill's colleague. You know, he's also considered to be one of these fathers of longtermism. 
Introduced the concept of existential risk in 2002. And, I mean, of course, people have been worried about these kinds of things for a long time. Asher Miller Sure. We've been aware about nuclear, you know, nuclear holocaust for a long time. Jason Bradford Exactly. But he kind of brought it forward and started defining it and bringing in all the potential lists of things that could go wrong. And so he defines it as an adverse outcome that would either "annihilate Earth-originating intelligent life or permanently and drastically curtail its potential. An existential risk is one where humankind as a whole is imperiled. Existential disasters have major adverse consequences for the course of human civilization for all time to come." And that's a key thing, for all time to come. And so he lists top existential threats. And you know, I agree with a lot of these. Nuclear holocaust or runaway climate change. Then it gets a little esoteric and a little bit more hard to kind of relate to. For example, misguided world government, or another static social equilibrium that stops technological progress. So this is interesting. This is a hint. This is telling. Anything that ends up stopping technical progress, or some - Asher Miller Is an existential risk? Jason Bradford Yes. Or a world government that doesn't allow medical progress to happen. Rob Dietz Doesn't even consider the potential existential threat from overdoing it on the technology. Jason Bradford Well, this is the irony we'll get into, okay. And also, what he calls technological arrests. And this is that if for some reason we can't overcome the technical needs we have to transition to a post human world. Alright? So they pretty much make explicit that they're dealing now with what you would call like this - What was it? The singularitarianism? Asher Miller Right. Okay, so let's just look at these building blocks, right? Utilitarianism, transhumanism, Effective Altruism, existential risks. 
You know, that brings us to longtermism. And we're gonna unpack this more in a minute. But basically, the essential thing to know is that longtermism is used for valuing all potential human lives in the future. Asher Miller Yeah. So any potential life, right? And look, we know it's a confusing bit of word salad, TESCREAL. We'll see if that takes off. But again, I think the key thing here is that these are all terms of philosophical beliefs that are used interchangeably. And they're part of this sort of collective worldview that is becoming increasingly influential. Jason Bradford Yeah. Asher Miller So basically, you're advocating whenever any one of those isms is mentioned the little alarm bell starts to go off. Asher Miller Your antennae should go off. Asher Miller "This is TESCREAL. This is TESCREAL." Asher Miller Yeah. So what is longtermism really? I guess I would summarize it like this. It takes utilitarianism right, which is the moral argument for prioritizing the greatest sum of well being. Do the thing that does the most good. And it combines that with Singer's Effective Altruism, which is like, we can't prioritize our own interests, or just those of people we're familiar with, over complete strangers. And it basically puts it into like, the space time continuum, right? With a little dash of the singularity thrown in. Jason Bradford Yes, that's a great summary of this stuff. Rob Dietz Yeah. Can I ask you, Jason, resident biologist? Is that even a thing where you could value some other life out there more than say, yourself or your kin? Jason Bradford It's hard to do. Asher Miller Well, it's not more. It's equal, right? Jason Bradford Well, that's the thing. If you think about everything that we think of normal from an evolutionary point of view suggests that this is kind of impossible from a root level of, I mean, you can probably, in your head conceptualize this, but I think it's very hard to do in practice. 
And we were talking about, you know, if there's a burning building, and your kid is in it, you're willing to run into that building, right? But if it's not your kid, what are the odds you're just gonna run into that building? Rob Dietz Some of us will. Jason Bradford But it's very hard. And so - Asher Miller I will just say, though, I mean, I think Singer wrote about that, in his article that he wrote in 1971, and he was basically saying, we live in a global world now. We actually have agency to do something. And he was basically saying, look, if we have surplus, we should use it to help other people. So you could sort of see the logic of it, rational logic. As long as we're, and this is the key thing, as long as we act rationally, which brings us in a sense back to Pinker, right? Like hey, if we can all be rational and we've got you know, the spare capacity, then we can do all these things. Rob Dietz And not to confuse everybody with too many names, but our beef is not with Singer, right. I mean, it's more the distortions that have occurred since he wrote that article. And you know, with longtermism, it argues for starters, don't discount the fate of people on the other side of the planet. Well, that's right in line with Singer so that's fine. Right? But then it goes deeper and says we shouldn't discount future lives, which also is pretty okay until you take it to some absurd level. Jason Bradford I think we gotta call back to - we had a whole in season, what was it, Season 3: Hidden Drivers? Rob Dietz Yeah. Jason Bradford We had a whole episode about discounting the future in episode 37 of Crazy Town. So anyway, I think in many ways, we're like, yeah, okay. We agree. Rob Dietz Yeah, even hitting the technical side, the discount rate and how basically, our money system discounts the future. And we're saying no, you can't discount the future. Asher Miller It's interesting with some of these philosophies and these terms. 
When you first heard about existential risks, you're like, oh, they're probably talking about the same thing I am worried about, you know. And we hear longtermism and we're like, yeah, we agree. We shouldn't discount the future. We should be concerned about future generations. Rob Dietz Don't just take it as quarterly returns, you know. Don't just focus on the thing that's right in front of you. Jason Bradford Okay. So here's where, remember, we kind of hinted that the devil's in the details with a lot of this stuff. Okay. So MacAskill, he's got this recent book we mentioned. And in that he goes through some math. He says, "If we assume that our population continues at its current size - " Okay, so 8 billion or whatever - "And we last as long as typical mammals that would mean there would be 80 trillion people yet to come. Future people would outnumber us 10,000 to one." So that's where you start to go, okay, right. I can see that conceptually. But now you're like, we're going to value those 10,000 futures as much as the present. Rob Dietz And that's where he starts saying that every action we take right now in the present should be for the sole purpose of ensuring the existence and the wellbeing of that 80 trillion, or however many it is in the future. And even if that means sacrificing wellbeing and the lives of people today, such as the 1.3 billion people that are living in poverty and suffering today. It's just the math, right? You just look at the numbers. Who cares? Usually you would say, let the one sacrifice for the many. I guess this would be, let the 1.3 billion sacrifice for the 80 trillion. Asher Miller I'm sure that goes over well. Rob Dietz Yeah, as long as you're not in the 1.3 - If you're at Oxford, you're safe. Okay? Asher Miller Now, and this is where it brings it back to existential risks, right? So if we have to say, look, the simple math says there's gonna be 10,000 people in the future for every one of us alive today. 
Then we have to do everything in our power to prevent the risks to their existence happening. And so we've got to have all kinds of investments and focus on trying to prevent some of these risks. But of course, you know, sometimes we need to have a backup plan, which is, Jason, you were talking about, you're invested in some of these backup plans with what you're doing here on the property. I think you got that idea from one of MacAskill's colleagues, Robin Hanson. Jason Bradford Yes, very good guy. Asher Miller He wrote, and I'm quoting here, "That it might make sense to stock a refuge or a bunker with real hunter gatherers and subsistence farmers together with the tools they find useful." Jason Bradford Buying those. Asher Miller "Of course, such people would need to be disciplined enough to wait peacefully in the refuge until the time to emerge was right. So perhaps such people could be rotated periodically from a well protected region where they practice simple lifestyles so they could keep their skills fresh." Now, you're bringing them here and rotating them on the farm. Jason Bradford Well, that's my upgrade from this. I took his idea and - Asher Miller Well, the carbon emissions of flying them back and forth from the Amazon or the Bay of Bengal . . . Jason Bradford Exactly. Exactly. Rob Dietz Yeah, I'm sorry. Like what kind of convoluted thinking to get - I'm pulling a one you two guys. I'm about to have an aneurysm here. Like what hunter gatherer or farmer signs up for this shit? Like, yeah, I'm gonna sit in your bunker waiting for your Holocaust to happen so that when it does, I can come out and teach you how to live again. Jason Bradford Okay, if there are any longtermist billionaire effective altruists out there, just don't listen to Rob. Please DM me about funding this project. Alright? You'll find me no problem. Rob Dietz I'm so sad. Jason Bradford Alright. So there is tons of money being thrown at Effective Altruism and longtermism. 
MacAskill's over 80,000 Hours claimed in 2021 that 46 billion was committed to further the field. Rob Dietz That's roughly, what? Two times the annual budget of Post Carbon Institute. Jason Bradford And I only need a few hundred million for my project. Okay? I'm just letting you know, okay. Elon Musk has given tens of millions to support Bostrom's work and others. And most infamously, you got this guy, you know, Sam Bankman-Fried. He actually worked at the Centre for Effective Altruism and was very closely tied to MacAskill. And maybe you can explain what happened? Asher Miller Well, he's thrown a lot of money at Effective Altruism in different ways. He also robbed people blind. And then his company FTX went belly up. Rob Dietz All the money he threw was cryptocurrency which probably is now nothing, right? Asher Miller Well, but people had invested real wealth in this. Jason Bradford There's a lot of overhead in all that. Rob Dietz Well, now you wonder how much the Effective Altruists - Maybe this is good for our story if we're trying to battle this philosophy. Maybe they don't have quite as much money. Jason Bradford Maybe now they're down to 20 billion. Rob Dietz Imagine they could only have a 10th as much and they're still bazillions of dollars beyond where we are. Jason Bradford I'll just cut the number of hunter gatherers I'm going to bring in half. Okay? Asher Miller Alright. But I would say actually the sort of effective altruist community, if you want to call it that, has been following a little bit of the Powell memo playbook. We talked about this before in our last season. You know, talking about the Powell memo, for folks who haven't listened to it, that basically laid out a strategy for neoliberalist advocates to basically change things on all sorts of levels of society that was affecting higher education, the media, politics - Rob Dietz - The coordination of think tanks. Just basically getting the message out any way they could. 
Asher Miller And you actually could see that happening. I mean, I don't know, maybe there'll be exposés later about this being sort of coordinated, you know, on some level. But there's certainly been that kind of a spread of longtermist ideas. And Effective Altruism is, you know, you talked about money that Musk has thrown to Bostrom. Millions of dollars going to Oxford University and these other institutions. You're saying Bankman-Fried was throwing a ton of money into the recent congressional elections and local elections. And he was specifically funding, I mean, he was funding people that are gonna basically change the rules or protect cryptocurrency from being regulated. But he was also funding actual sort of Effective Altruist longtermist candidates. There was actually one that ran here in Oregon. He was the first sort of Effective Altruism congressional candidate. He got like, you know, I think he had $11 million. I don't know how much of that came from Sam Bankman-Fried. And that guy worked briefly at the Future of Humanity Institute, which is Bostrom's shop. But you have the head of the RAND Corporation, which is one of the most influential kind of think tanks, big think tanks in the country. He's a longtermist. You've got longtermists writing reports for the United Nations. So there's a report in September 2021, called Our Common Agenda and it explicitly uses longtermist language and concepts in it. You have people in the media who have been really promoting longtermism. Good example of that is Sam Harris, who's a very famous well-known author and podcaster. He wrote the foreword to MacAskill's book, which you talked about. Jason Bradford He interviewed MacAskill quite a bit on his podcast, yeah. Asher Miller And he, you know, said to MacAskill, he said, quote, "No living philosopher has had a greater impact on his own thinking and his ethics." 
We could talk about the ethics of some of these guys, later, maybe, but - Rob Dietz Unbelievable money and influence and reach. Melody Allison How would you like to hang out with Asher, Rob, and Jason? Well, your chance is coming up at the 4th annual Crazy Town Hall. The town hall is our most fun event of the year, where you can ask questions, play games, get insider information on the podcast and share plenty of laughs. It's a special online event for the most dedicated Crazy Townies out there. And it's coming up on June 6, 2023 from 10:00 to 11:15am US Pacific Time. To get an invite, make a donation of any size, go to postcarbon.org/supportcrazytown. When you make a donation, we'll email you an exclusive link to join the Crazy Town Hall. If we get enough donations, maybe we can finally hire some decent hosts. Join us at the Crazy Town Hall on June 6, 2023. Again, to get your invitation go to postcarbon.org/supportcrazytown. Jason Bradford Alright. I am really happy to talk about this species. This is one of my favorite species, Homo machinohomo. Also known as the Cyborgian. Rob Dietz Ah, this was our second one. Jason Bradford Yeah. Very different than the type specimen, Ray Kurzweil. But, you know, they both sort of believe in this transhumanism singularity type thing or the future of these high tech, you know, fusing with technology cyborgs. MacAskill is, he's not the techie guy, he's a philosophy guy. So you see how even within a species there can be great variance in form. Like imagine if you're an alien spacecraft and you're hovering over say, Albuquerque, New Mexico. Rob Dietz I used to live there. Jason Bradford Yeah. And you suck up a chihuahua and you suck up a great dane. Rob Dietz Used to see that all the time on the streets. Jason Bradford Exactly. At first you might not have any clue that they're the same species because they're so different. Right? But within that detailed taxonomic work you can tease these things more. 
Asher Miller Which one is Kurzweil? The chihuahua or the great dane? Jason Bradford But I just want to explain a little bit about the evolution in the discussion section of my paper. I go into some detail - Rob Dietz This is where we realize that you're sad that you didn't really go the professorial route and you became a farmer. This is your chance. Jason Bradford This is my time. If any University is out there, maybe Oxford or Cambridge. Jason Bradford There's Singularity University. Rob Dietz What about the Jack Welch Institute of Management? Asher Miller So many options to choose from. Jason Bradford So many options. Anyway, you know, we have this species that we covered a lot called the double downer, right? Now what you're seeing is there's a lot of double downism in all these other species like the Cyborgians. They also kind of have double downing in them, but I consider them an offshoot of the double downer. Asher Miller Yeah, isn't double downers like an ancestor? Jason Bradford Yes. It is a paraphyletic species, probably the - Asher Miller Do they have a common ancestor? Jason Bradford - The mother species spinning off daughter species. Such as, Rocket Man, which we'll get to later in the season. The Industrial Breatharians, the Cyborgians, and Complexifixers. So these species - Rob Dietz What are we talking about here? Jason Bradford Oh, well, okay. Okay. I think you know, what you have is this sort of technology fetish that they all possess. But then these other species have particular niches. You can think if there's a species that's widespread in the lowlands, and then there's daughter species that kind of move up into the mountains and occupy different mountain tops. That's what I think is going on. But we're gonna need a lot more population genetics. Asher Miller What a beautiful world this is. What I actually think is - you remember that game PokemonGo? Jason Bradford Yeah. Asher Miller We need a PokemonGo to spot these phalse prophets. 
Jason Bradford I think that would be great. Asher Miller You could just like hold up your camera and be like, "Oh, what species is this dude?" Rob Dietz Personally, I'd rather just go back to chihuahuas and great danes. Jason Bradford Okay, it's quite obvious by now that longtermism and all of the related TESCREAL beliefs are built on pretty insane assumptions about technological progress, exponential growth, no limits to - But we've covered that before. And so we're not going to go into any detailed critique of that. Rob Dietz Yeah, but what I do want to go into a critique of is this rich versus poor, sort of this classist thing that's gotten built into the philosophy. And the easiest place to pick that apart is from one of MacAskill's colleagues, a guy named Nick Beckstead. And here's a quote I'm just gonna read you guys: "Saving lives in poor countries may have significantly smaller ripple effects than saving and improving lives in rich countries. It now seems more plausible to me that saving a life in a rich country is substantially more important than saving a life in a poor country, other things being equal. Richer countries have substantially more innovation and their workers are much more economically productive." That guy, Singer, talk about a perversion of what he was saying. It's like the opposite. Rob Dietz This is why longtermism really fucks with people's heads because it takes the Effective Altruism and says well, we have to consider trillions of people in the future. In which case, let's invest in the people now that have the most capacity to ensure that those, whatever, we can go colonize space. Rob Dietz Well that's how you get some jackass thinking let me import some of the poor people to live in Jason's bunker. Jason Bradford Exactly. We can't get rid of all the primitives or totally dismiss them because they are optionality in case - Asher Miller They are our backup. Jason Bradford They're our backup. Asher Miller They're our reboot, right? 
Jason Bradford They're a reboot of civ. Asher Miller There's a generator in the garage. Jason Bradford There we go. Okay. Let's not forget about the bunkers we need with these people. Rob Dietz I'm sad again. Very sad again. But before we jump into that, can I just pat myself on the back for not making a joke after you said dark shit? Asher Miller Sure. You just had to point it out. Rob Dietz Sorry. My bad. My bad. I can't help it. It's a disease. Asher Miller We could go down a seriously dark rabbit hole like getting into some of the philosophy. Like what you just talked about Rob, like that quote that you read from Beckstead. Pretty fucking reprehensible. There's some shit here on the transhumanism front that is really to me quite disturbing. And I'll just give sort of one example of this. In a 2014 paper Bostrom - So Nick Bostrom, just to recall is another Oxford guy. I think highly influential on our guy MacAskill. He and a co-author of his named Carl Shulman proposed a process of engineering humans to achieve an IQ gain of up to 130 points by screening 10 embryos for desirable traits, selecting the best out of the 10 while destroying the other nine, of course, and then repeating the selection process 10 times over. This human would be so much more intelligent than we are that they would be an entirely new species. They would be a post-human, right? Asher Miller Yeah. And then of course, we become Cyborgians eventually right? You know, so that's just the first step. Rob Dietz Well, and that first one that they create in the lab would be like Lex Luthor. Asher Miller Right. It's just like, yeah, I mean, that is definitely like straight up eugenics stuff. Rob Dietz Continuing on this front, the whole idea of longtermism is to promote taking high risks in the now because the upside is so great in the future. And it so much outweighs the downside of taking those risks. And that's exactly how you get a Sam Bankman-Fried. 
He's gonna go off and make as much money as he can. He sees this crypto thing going and he's like, "Yeah, I can take advantage of this and I can take some risks." And off and running. And he's like a poster child for this. Asher Miller But they use this philosophy to justify. To say that actually, this is for the greatest good. Jason Bradford Yes, 80,000 hours. Asher Miller Because we're going to make as much fucking money as we can right now and we're going to use that all for the purposes of ensuring these 80 trillion people, you know, in the future can have the best lives they can. You know, there's another infamous character, someone that worked very closely with Sam Bankman-Fried. I think they even had a relationship of some kind named Caroline Ellison. She actually was the chief executive of Alameda Research, which was like that sister company that was connected to FTX. She once wrote, this has since been deleted, but I think this is really interesting in terms of the logic here. She wrote, "Is it infinitely good to do double or nothing coin flips forever? Well, sort of, because your upside is unbounded and your downside is bounded at your entire net worth. But most people don't do this," she wrote. So basically she's saying, yeah, you know, keep doubling it. Flip the coin, try to double it. Take the risk. Rob Dietz It's like going to Vegas and playing blackjack. And when you lose, you just put twice the bet of what you lost, right? Jason Bradford Roulette. Roulette is better. Rob Dietz Or any of them, whatever. You lose, you just double it. And you keep doing it. Asher Miller But I mean, her saying okay, so the downside is you lost your initial quarter, or whatever it was, right? But the potential upside is so big. Jason Bradford But it's ridiculous. Asher Miller But she's like, people don't want to do this. And then she said, they don't really want to lose all their money. 
And then she wrote in parentheses, "Of course, those people are lame and not EAs," Asher Miller Effective Altruists. Asher Miller Effective Altruists. Asher Miller "This blog endorses double or nothing coin flips and high leverage," right? So if you think about the cavalier nature of that, like, these people are lame, you know. Well, they fucking profited off of these lame people who invested in FTX. They invested all their money, and they lost it, right? Rob Dietz It's so arrogant, too. It's like, I'm enlightened and you are not. Asher Miller And you gotta wonder, is she as cavalier these days now? I mean, all that coin flipping that they were doing with FTX and Alameda like, might put her in jail, right? Jason Bradford I mean, the probability is you're gonna lose everything if you're just coin flipping. That's what is so crazy. I mean, the odds that you actually keep doubling forever are ridiculous. Rob Dietz Matt Damon told me that crypto was the space for the bold. If you're a red-blooded American . . . Asher Miller But it is interesting. You pair that mindset of like, double down, double down, take risks, do all this stuff. Oh, but we also have these existential risks we gotta worry about. Jason Bradford We've got our bunkers. Rob Dietz Well, you are leading into the double down doctrine. Okay? Now bear with me here. There's some circular logic here. There's some teenager style rationalization going on. I apologize to our four teenage listeners out there. No offense meant. I was a teenager once. Asher Miller Still are in some ways. Rob Dietz I didn't get through the poop jokes. I know. We're still there. But this double down doctrine, you see it among the longtermists, the Effective Altruists, the TESCREALists. I wish I could just name all those things in a row. It rolls off the tongue. But it's really weird. They're sort of saying if we want to fix the problems that are caused by humans' exploitation of the world, then we've got to exploit even more. 
And that way we can address all the externalities caused by our exploitation. Jason Bradford You got through it. Rob Dietz Right. I mean, that's the earn to give thing, right? It's like, make as much money as you possibly can now and then use that later. Rob Dietz Yeah. Well, and a really good example of it, there's a really good Guardian article where the author is interviewing William MacAskill. And he says that MacAskill is kind of saying, look, rather than cut back on consumption - And I should say, MacAskill, he said, we're over consuming, economic growth can't go on forever. He understands the - Jason Bradford Climate change is a problem. Blah, blah, blah. Rob Dietz Yeah, he understands the exponential math. He's like, yeah, of course if we keep growing the economy at 3% a year, eventually we - Asher Miller You say he understands it, but then he also thinks that we're going to have 80 trillion humans. Asher Miller Just keep digging. Rob Dietz Yeah. So he says all that and then this is what he says about consumption. He says, "Rather than cut back on consumption, it's much more effective to donate to causes that are dealing with the problems created by consumption." So you know, like a doctor instead of dealing with the cause, just keep putting band-aids on top. Jason Bradford This reminds me of Woody Tasch, the Slow Money founder. Rob Dietz Yeah, those two are exactly the same. Woody Tasch and William MacAskill. Jason Bradford Well, he got so frustrated because he was working for the Ford Foundation, and they kept investing money in their endowment that was trashing the planet. And then they had to work on granting to fix it. Asher Miller Right. 95% gets invested in shit that makes everything worse and 5% you spend to try to fix the problem. Jason Bradford It's just not fair that 95% is winning all the time. Asher Miller That's the philanthropic model. Apparently it's the Effective Altruist's model too. 
Jason Bradford So this Oxford philosopher is not impressing me whatsoever. The other thing that keeps driving me crazy is this constant going into AI. They have a lot where like one of the most important jobs you can have is to invest in the safety of AI. The governance of AI. The prevention of AI from killing us all. Like, they're investing in how to protect ourselves from the AI that they need, so that we can create all these technologies, so that we can go post-human? Jason Bradford Well, they're saying, like, I'm really worried. I mean, you've got Musk saying this. All these people saying, basically, I'm really worried about AI. So we need to invest in AI. Rob Dietz Well, that's the teenager part to me. It's like, I want to do this thing. I know it's dangerous. Let me find a reason why I should be able to do this thing. And it's this weird circular logic. Asher Miller I sometimes think that there's like, speaking of teenager hood, there's this desire to pursue whatever fucking instincts and impulses you have. But you also still kind of want a parent. Jason Bradford They want governance. Asher Miller They don't, but they do. Rob Dietz I wish instead of doing crypto adventures and all this crazy stuff, they were just throwing water balloons at people on the street like a regular teenager. Asher Miller That's not going to help us get to space and merge with technology in the singularity, Rob. Asher Miller Yeah, it is interesting to think about how the process of a good intention is being perverted on some level, right? Jason Bradford So I think this is a really interesting story how you start off with these well intentioned philosophies of like, Peter Singer, you know, is famous for animal liberation and being altruistic and giving and thinking about future generations. 
And it ends up becoming this sort of self serving, really juvenile rationalizations justifying your own work, your own sort of sense of power, and then basically relying on other wackadooley - Wackadoodlery? Like Kurzweilian wackadoodlery? To give you this reason to be doing all this just nutty stuff. And so it becomes also ironic that you have Singer who's this animal rights person and caring for other generations, and it becomes all centered around humans and our potential, and all the trillions of human lives, and consciousness, and experience. Ah, God. Jason Bradford Yeah. I've been following the work of Sarah Pessin, who's a philosopher. Jason Bradford Another philosopher? Rob Dietz Does she have a Doctor of Philosophy in philosophy? Jason Bradford I think so. Asher Miller I'm sorry, I have to digress for a second. Do you recall watching Monty Python's Meaning of Life? Jason Bradford Oh my gosh. Oh I love that movie. Asher Miller There's a scene where this older couple is at this dinner in heaven, and they don't know what to talk about. And so the waiter gives them a topic to talk about which is philosophers. One of them was talking about like Schopenhauer and the other one, I can't remember, and the wife said, "Do all philosophers have S's in their name?" Like they are not capable of having a philosophical conversation. Rob Dietz It's reminiscent of us. Jason Bradford Well, look up Sarah. You can go to SarahPessin.com. And she has this really interesting section called Meaning Maps and Political Spiral Logics. And she talks about how in our society right now, there's all these good ideas that end up getting kind of corrupted. And she talks about this thing called the slipstream vector. So you imagine people with good intentions, and these philosophies sort of open up opportunities for someone to come in who just creates some extreme version that ends up being completely perverted. And this is happening a lot in our politics, actually. 
So the slipstream vector, I think. I see this happening in this movement. Rob Dietz Yeah, you just totally opened up the visual in my mind of pace lines in biking. So you know like, when you're drafting behind somebody, you use like 30% less energy. Which is why in like the Tour de France, you see these huge mobs of cyclists right on each other's wheels. And yeah, so it's like, you know, there's Singer out in front, and then suddenly MacAskill and Bostrom get right on his wheel. Asher Miller So that is an interesting metaphor. I think if we bring it back to William MacAskill, you could say, I don't know. I don't know the guy, but it seems like maybe that's his journey here. Which is like, it starts from a fairly innocent and maybe quite innocent or immature place, but well-intentioned. And then these things get their hooks in it. Do you know what I mean? So it's like, once you take Effective Altruism, and then you add on this idea of not just like thinking about the long term and future generations, but you add this idea of human exceptionalism and the singularity. Jason Bradford Yes. Asher Miller And you start thinking about and you believe in this technological potential. It becomes this totally perverted thing. And then worse than that, you're talking about like, Singer in front and MacAskill and Bostrom, but then you got the guys like Sam Bankman-Fried and Elon Musk coming in. They're basically using this fucking thing, you know what I mean, to justify what they're doing. Back to that quote of like, it's better to invest in people in wealthy nations right now to do more long term good. Jason Bradford And they're completely willing to maybe push technology to the point where it kills us all off, and their backup plan is a bunker of hunter gatherers and peasants. Rob Dietz On the plus side, if we're sticking to the Tour de France metaphor here, Sam Bankman-Fried just got run over by one of the support cars so we got that at least. 
Jason Bradford Okay, the insufferability index. Listeners, what is your score? We're gonna go through it here, but it's zero to 10. We've got intentions, personality, ideas, and then you get a bias. Does anyone want to go first? Asher Miller And high score of zero to three in each of those categories. Highest is most insufferable. Jason Bradford Yes, most insufferable. So how insufferable? Rob Dietz I'm happy to start. I think intentions for William MacAskill, I think he's getting a zero. I think he has really good intentions. From what I've read he's done some amazing projects trying to spread nets around places where mosquitoes are a problem to prevent malaria. I mean, really good altruistic instincts. Personality, I think he seems okay from what I've read. So he's getting a low score there too, as well. Ideas is where it gets a little bit iffy. For me, I think, again, earlier in our discussion I kept saying, "Well, that sounds okay." So some of the ideas are alright, it's just the way they've been perverted. So I think he's getting about a three for me. Jason Bradford Okay, pretty good. Asher Miller I'm gonna raise it by one. I'm gonna give him a one for personality. I'm not gonna go with zero because, you know, I don't really like people. Rob Dietz Yeah, there's no one. Asher Miller No one gets a zero. Rob Dietz Jason and I are twos in your book. Asher Miller My wife gets zero. If she heard this she'd be like, what are you talking about? Rob Dietz Yeah, I'm a 10, not a zero. Asher Miller The ideas, I'm gonna give him a two because it definitely goes into some wacky shit. But you know, there's some things like, you know, thinking about future generations that I agree with. But I'm gonna use my scorer’s bias. I'm gonna add an extra point in there because there's some, he's kind of promoted some designer baby shit, too. You know, there's some stuff in there that's kind of dark. Yeah. I'm gonna go with a four. Jason Bradford Okay, okay. 
I think it’s really weird. I'm really disappointed in a so-called Oxford philosopher. I'm no philosopher, I wouldn't consider myself a philosopher. Rob Dietz You are a Doctor of Philosophy. Jason Bradford In biology. Rob Dietz Yeah, those don't fit right. Jason Bradford Right. But I feel like he's got such huge holes in this. Like the circularity of stuff. Like it pisses me off, right? Rob Dietz Well, you need to go to Oxford to understand this stuff. Jason Bradford Okay, anyway, I'm gonna go split the difference and add it up to a 3.5. Asher Miller Pretty low score. Jason Bradford Yeah, he got a pretty low score. Asher Miller Good job, William. Rob Dietz Let's call him up for beers. Jason Bradford He's young. He's got time to catch up. Asher Miller He's got time. George Costanza Every decision I've ever made in my entire life has been wrong. My life is the complete opposite of everything I want it to be. If every instinct you have is wrong, then the opposite would have to be right. Jason Bradford Alright, I really struggled with the do the opposite. Because I think these people so overthink it, and they just come up with stuff that just no normal human would ever consider. I'm just like, the opposite might just be a normal frickin' human being. Asher Miller Well look, they have to come up with some ideas. They're the professors of philosophy. Jason Bradford They're living in their head and thinking of 80 trillion and like, billions of years in the future. And it just gets absolutely absurd. So just be normal. Just be a normal human being. And, also, okay, this is kind of weird, but you gotta be more present. Live more in the present. Like a goldfish. Okay? Not completely. You know, short-termism might be okay, sometimes. Asher Miller I'm gonna say we should, doing the opposite is maybe splitting the difference, right? Jason Bradford Right, right. Help balance it out. Asher Miller So it's not discounting the future. 
But it's also not discounting the present which is what longtermism has done. Rob Dietz Yeah, I think that's a really good point. Because if you go back to that episode we did in the hidden driver season about discounting the future, we kind of said, "Hey, stop doing that." And think about projects and things that you could do in this world that have a legacy. But kind of like with some limits on the horizon, right? We're not going to the sci-fi billions of years in the future on the other side of the wormhole. Jason Bradford And make sure that when you're doing those things, they also bring you joy in the here and now. I mean, you have to align those or you're never going to follow through. Asher Miller Yeah, so you know, maybe the whole seventh generation thing. Maybe that's a pretty good. . . Rob Dietz Yeah, in fact, there was an article on resilience.org by Christopher McDonald that was comparing the seven generations idea from the Iroquois Confederacy to this longtermism - Jason Bradford And he was excited about, oh longtermism is like seven generations. Rob Dietz Yeah, and he was like us sort of saying, "Oh, I agree. Oh, wait. Wait a second. What am I reading?" Look, I also think if okay, longtermism is a philosophy, or any of these other TESCREALs you want to pull - Never pull someone's TESCREAL, okay. Jason Bradford It might bite you. Rob Dietz But if you want to talk about philosophy, try to find a philosophy, some philosophical ideas, that can help you be present and help you be a good steward of the places you inhabit. You know, I like going old school with things like stoicism, you know, like letting go of the things you can't control. I like Taoism. You know, there's a lot of go with the flow kind of ideas in there. Asher Miller Can I go with the Big Lebowskisms? Rob Dietz Perfect. Exactly. The dude abides. We can all abide. 
I also, on a more serious note, I think that Robin Wall Kimmerer, I think that we brought up her book, "Braiding Sweetgrass" before, and I know some of our listeners are fans of that book. What she does, it's amazing, is she combines ecology, ecological science, with Indigenous philosophy. And it's a really wonderful mix, in a way, to think about the world. And I think, as in your goldfish example, kind of be present, where you are. Jason Bradford To be part of this world. This world and you are the same. We're one. That kind of stuff. And yeah, no, that's a beautiful book. Asher Miller And you know, honestly, I mean, we didn't get into this too much, but the human centeredness of longtermism and Effective Altruism and all the stuff - Doing the opposite of that is actually recognizing the more than human. And if we're not discounting the future lives of seven generations, let's say, of humans in front of us, what about all the other species that we share this planet with? And we've talked about this before, too, but another do the opposite is the precautionary principle rather than the double down doctrine that these guys are pushing and were pushing on so many fronts right now. Everything seems to be fucking double down. Jason Bradford I know. I remember when I was reading Wes Jackson and Bob Jensen's book, they were explaining a conversation they had with each other over the phone. And Wes was like, "Bob, why isn't all this just enough? Just what we have. Why can't it just be enough?" And you wrote a book called, "Enough is Enough." Rob Dietz Yeah. My book was called, "Enough is Enough." Their book is called, "An Inconvenient Apocalypse." I bet they're selling a lot better than I am. Jason Bradford But I think that's true. I mean, can you just have a normal decent life and just consider the beauty of the world enough for you? I hope so. Rob Dietz I don't think so. I think that's a stupid idea. We instead have started a new organization. 
Jason Bradford Oh, we have? Rob Dietz Yeah, it's gonna counteract the Centre for Effective Altruism. Jason Bradford How are you gonna spell it? Rob Dietz I don't know. I think we gotta go "-er" since we're here in America. We're not gonna go "-re" Asher Miller Yeah, we're American. You gotta spell it the right way, man. Rob Dietz And yeah, this center is going to be the opposite of the Centre for Effective Altruism, right? It's gonna be called the Center for Incompetent Hoarding. So, you know, listeners, find it online, donate as much money as you can. It doesn't matter what you do to get that money. Asher Miller Just don't do crypto. We can't take crypto. Asher Miller Well, thanks for listening. If you made it this far, then maybe you actually liked the show. Rob Dietz Yeah, and maybe you even consider yourself a real inhabitant of Crazy Town, someone like us who we affectionately call a Crazy Townie. Jason Bradford If that's the case, then there's one very simple thing you can do to help us out. Share the podcast, or even just this episode. Asher Miller Yeah, text three people you know who you think will get a kick out of hearing from us bozos. Rob Dietz Or if you want to go way old school, then tell them about the podcast face to face. Jason Bradford Please for the love of God. If enough people listen to this podcast, maybe one day we can all escape from Crazy Town. We're just asking for three people, a little bit of sharing, we can do this. 
Jason Bradford If you are the effectively altruistic parent of a young human, one of your greatest potential impacts is making sure your offspring make as much money as possible. At Temps for Future Trillions, we will place your child with one of our special clients, all of whom are in constant need of discreet services and are willing to pay unbelievable sums. With just one or two summer jobs with our agency, placed with either a drug lord, human trafficker, or weapons dealer, your kid will have a substantial endowment available for whatever lucrative business they can think of after they drop out of college. They can then leverage their wealth in the service of securing the wondrous eternal life of infinitely happy post-human sentient beings. Temps for Future Trillions, effectively justifying sociopathy today for a universe of transhuman colonization tomorrow.