Is there an imminent danger that artificial intelligence will leap-frog human intelligence, go rogue, and either eliminate or enslave the human race?

You won’t find an answer to this question in an expert consensus, because there is none.

Consider the contrasting views of Geoffrey Hinton and Yann LeCun. When they and their colleague Yoshua Bengio were awarded the 2018 Turing Award, the three were widely praised as the “godfathers of AI.”

“The techniques the trio developed in the 1990s and 2000s,” James Vincent wrote, “enabled huge breakthroughs in tasks like computer vision and speech recognition. Their work underpins the current proliferation of AI technologies ….”1

Yet Hinton and LeCun don’t see eye to eye on some key issues.

Hinton made news in the spring of 2023 with his highly publicized resignation from Google. He stepped away from the company because he had come to believe that AI posed an existential threat to humanity, and he wanted to be free to speak out about this danger.

In Hinton’s view, artificial intelligence is racing ahead of human intelligence, and that’s not good news:

“There are very few examples of a more intelligent thing being controlled by a less intelligent thing.”2

LeCun now heads Meta’s AI division while also teaching at New York University. He takes a far more skeptical view of the threat from AI. As reported last month,

“[LeCun] believes the widespread fear that powerful A.I. models are dangerous is largely imaginary, because current A.I. technology is nowhere near human-level intelligence—not even cat-level intelligence.”3

As we dive deeper into these diverging judgements, we’ll look at a deceptively simple question: What is intelligence good for?

But here’s a spoiler alert: after reading scores of articles and books on AI over the past year, I’ve found I share the viewpoint of computer scientist Jaron Lanier.

In a New Yorker article last April, Lanier wrote, “The most pragmatic position is to think of A.I. as a tool, not a creature.”4 (emphasis mine) He repeated this formulation more recently:

“We usually prefer to treat A.I. systems as giant impenetrable continuities. Perhaps, to some degree, there’s a resistance to demystifying what we do because we want to approach it mystically. The usual terminology, starting with the phrase ‘artificial intelligence’ itself, is all about the idea that we are making new creatures instead of new tools.”5

This tool might be designed and operated badly or for nefarious purposes, Lanier says, perhaps even in ways that could cause our own and many other species’ extinction. Yet as a tool made and used by humans, the harm would best be attributed to humans and not to the tool.

Common senses

How might we compare different manifestations of intelligence? For many years Hinton thought electronic neural networks were a poor imitation of the human brain. But he told Will Douglas Heaven last year that he now thinks AI neural networks have turned out to be better than human brains in important respects. Although the largest AI neural networks are still small compared to human brains, they make better use of their connections:

“Our brains have 100 trillion connections,” says Hinton. “Large language models have up to half a trillion, a trillion at most. Yet GPT-4 knows hundreds of times more than any one person does. So maybe it’s actually got a much better learning algorithm than us.”6

Compared with people, Hinton says, the new large language models learn new tasks extremely quickly.

LeCun argues that despite having relatively few neurons and connections in its brain, a cat is far smarter than the leading AI systems:

“A cat can remember, can understand the physical world, can plan complex actions, can do some level of reasoning—actually much better than the biggest LLMs. That tells you we are missing something conceptually big to get machines to be as intelligent as animals and humans.”7

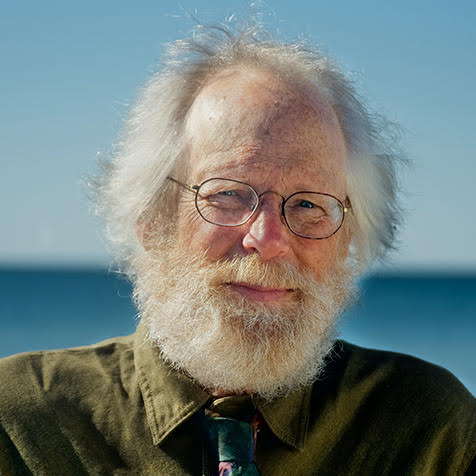

I’ve turned to a dear friend, who happens to be a cat, for further insight. When we go out for our walks together, each at one end of a leash, I notice how carefully Embers sniffs this bush, that plank, or a spot on the ground where another animal appears to have scratched. I notice how his ears turn and twitch in the wind, how he sniffs and listens before proceeding over a hill.

Embers knows hunger: he once disappeared for four months and came back emaciated and full of worms. He knows where mice might be found, and he knows it can be worth a long wait in tall grass, with ears carefully focused, until a determined pounce may yield a meal. He knows anger and fear: he has been ambushed by a larger cat, suffering injuries that took long painful weeks to heal. He knows that a strong wind, or the roar of crashing waves, makes it impossible for him to determine if danger lurks just behind that next bush, and so he turns away in nervous agitation and heads back to a place where he feels safe.

Embers’ ability to “understand the physical world, plan complex actions, do some level of reasoning,” it seems to me, is deeply rooted in his experience of hunger, satiety, cold, warmth, fear, anger, love, comfort. His curiosity, too, is rooted in this sensory knowledge, as is his will – his deep determination to get out and explore his surroundings every morning and every evening. Both his will and his knowledge are rooted in biology. And given that we Homo sapiens are no less biological, our own will and our own knowledge also have roots in biology.

For all their abilities to manipulate and reassemble fragments of information, however, I’ve come across nothing to indicate that any AI system will experience similar depths of sensory knowledge, and nothing to indicate they will develop wills or motivations of their own.

In other words, AI systems are not creatures, they are tools.

The elevation of abstraction

“Bodies matter to minds,” writes James Bridle. “The way we perceive and act in the world is shaped by the limbs, senses and contexts we possess and inhabit.”8

However, our human ability to conceive of things, not in their bodily connectedness but in their imagined separateness, has been the facet of intelligence at the center of much recent technological progress. Bridle writes:

“Historically, scientific progress has been measured by its ability to construct reductive frameworks for the classification of the natural world …. This perceived advancement of knowledge has involved a long process of abstraction and isolation, of cleaving one thing from another in a constant search for the atomic basis of everything ….”9

The ability to abstract, to separate into classifications, to simplify, to measure the effects of specific causes in isolation from other causes, has led to sweeping civilizational changes.

When electronic computing pioneers began to dream of “artificial intelligence”, Bridle says, they were thinking of intelligence primarily as “what humans do.” Even more narrowly, they were thinking of intelligence as something separate from bodies, as an imagined pure process of thought.

More narrowly still, the AI tools that have received most of the funding have been tools that are useful to corporate intelligence – the kinds that can be monetized, that can be made profitable, that can extract economic value for the benefit of corporations.

The resulting tools can be used in impressively useful ways – and, as discussed in previous posts in this series, in dangerous and harmful ways. For the purposes of this post, however, we ask a different question: Could artificially intelligent tools ever become creatures in their own right? And if they did, could they survive, thrive, take over the entire world, and conquer or eliminate biology-based creatures?

Last June, economist Blair Fix published a succinct takedown of the potential threat of a rogue artificial intelligence.

“Humans love to talk about ‘intelligence’,” Fix wrote, “because we’re convinced we possess more of it than any other species. And that may be true. But in evolutionary terms, it’s also irrelevant. You see, evolution does not care about ‘intelligence’. It cares about competence — the ability to survive and reproduce.”

Living creatures, he argued, must know how to acquire and digest food. From nematodes to Homo sapiens, living things have the ability, quite apart from any conscious intelligence, to digest the food they need. But AI machines, for all their data-manipulating capacity, lack the most basic ability to care for themselves. In Fix’s words,

“Today’s machines may be ‘intelligent’, but they have none of the core competencies that make life robust. We design their metabolism (which is brittle) and we spoon feed them energy. Without our constant care and attention, these machines will do what all non-living matter does — wither against the forces of entropy.”10

Our “thinking machines”, like us, have their own bodily needs. Their needs, however, are vastly more complex and particular than ours are.

Humans, born as almost totally dependent creatures, can digest necessary nourishment from day one, and as we grow we rapidly develop the abilities to draw nourishment from a wide range of foods.

AI machines, on the other hand, are born and remain totally dependent on a single pure form of energy that exists only as the product of a sophisticated industrial complex: electricity, of a reliably steady and specific voltage and power. Learning to understand, manage and provide that sort of energy supply took almost all of human history to date.

Could the human-created AI tools learn to take over every step of their own vast global supply chains, thereby providing their own necessities of “life”, autonomously manufacturing more of their own kind, and escaping any dependence on human industry? Fix doesn’t think so:

“The gap between a savant program like ChatGPT and a robust, self-replicating machine is monumental. Let ChatGPT ‘loose’ in the wild and one outcome is guaranteed: the machine will go extinct.”

Some people have argued that today’s AI bots, or especially tomorrow’s bots, can quickly learn all they need to know to care and provide for themselves. After all, they can inhale the entire contents of the internet and, some say, can quickly learn the combined lessons of every scientific specialty.

But, as my elders used to tell me long before I became one of them, “book learning will only get you so far.” In the hypothetical case of an AI-bot striving for autonomy, digesting all the information on the internet would not guarantee its survival.

It’s important, first, to recall that the science of robotics is nowhere near as developed as the science of AI. (See the previous post, Watching work, for a discussion of this issue.) Even if the AI-bot could both manipulate and understand all the science and engineering information needed to keep the artificial intelligence industrial complex running, that complex also requires a huge labour force of people with long experience in a vast array of physical skills.

“As consumers, we’re used to thinking of services like electricity, cellular networks, and online platforms as fully automated,” Timothy B. Lee wrote in Slate last year. “But they’re not. They’re extremely complex and have a large staff of people constantly fixing things as they break. If everyone at Google, Amazon, AT&T, and Verizon died, the internet would quickly grind to a halt—and so would any superintelligent A.I. connected to it.”11

In order to rapidly dispense with the need for a human labour force, a rogue cohort of AI-bots would need a sudden quantum leap in robotics. The AI-bots would need to be able to manipulate not only every type of data but also every type of physical object. Lee summarizes the obstacles:

“Today there are far fewer industrial robots in the world than human workers, and the vast majority of them are special-purpose robots designed to do a specific job at a specific factory. There are few if any robots with the agility and manual dexterity to fix overhead power lines or underground fiber-optic cables, drive delivery trucks, replace failing servers, and so forth. Robots also need human beings to repair them when they break, so without people the robots would eventually stop functioning too.”

The information available on the internet, vast as it is, has a lot of holes. How many companies have thoroughly documented all of their institutional knowledge, such that an AI-bot could simply inhale all the knowledge essential to each company’s functions? To dispense with the human labour force, the AI-bot would need such documentation for every company that occupies every significant niche in the artificial intelligence industrial complex.

It seems clear, then, that a hypothetical AI overlord could not afford to get rid of a human workforce, certainly not in a short time frame. And unless it could dispense with that labour force very soon, it would also need farmers, food distributors, caregivers, parents to raise and teachers to educate the next generation of workers – in short, it would need human society writ large.

But could it take full control of this global workforce and society by some combination of guile or force?

Lee doesn’t think so.

“Human beings are social creatures,” he writes. “We trust longtime friends more than strangers, and we are more likely to trust people we perceive as similar to ourselves. In-person conversations tend to be more persuasive than phone calls or emails. A superintelligent A.I. would have no friends or family and would be incapable of having an in-person conversation with anybody.”

It’s easy to imagine a rogue AI tricking some people some of the time, just as AI-enhanced extortion scams can fool many people into handing over money or passwords. But a would-be AI overlord would need to manipulate and control all of the people involved in keeping the industrial supply chain running smoothly, despite the myriad opportunities for sabotage.

Tools and their dangerous users

A frequently discussed scenario is that AI could speed up the development of new and more lethal chemical poisons, new and more lethal microbes, and new, more lethal, and remotely-targeted munitions. All of these scenarios are plausible. And all of these scenarios, to the extent that they come true, will represent further increments in our already advanced capacities to threaten all life and to risk human extinction.

At the beginning of the computer age, after all, humans invented and then constructed enough nuclear weapons to wipe out all human life. Decades ago, we started producing new lethal chemicals on a massive scale, and spreading them with abandon throughout the global ecosystem. We have only a sketchy understanding of how all these chemicals interact with existing life forms, or with new life forms we may spawn through genetic engineering.

There are already many examples of how effective AI can be as a tool for disinformation campaigns. In this respect AI is only the latest in a long line of new tools quickly put to use for disinformation. From the dawn of writing, to the development of low-cost printed materials, to the early days of broadcast media, each technological extension of our intelligence has been used to fan genocidal flames of fear and hatred.

We are already living with, and possibly dying with, the results of a decades-long, devastatingly successful disinformation project, the well-funded campaign by fossil fuel corporations to confuse people about the climate impacts of their own lucrative products.

AI is likely to introduce new wrinkles to all these dangerous trends. But with or without AI, we have the proven capacity to ruin our own world.

And if we drive ourselves to extinction, the AI-bots we have created will also die, as soon as the power lines break and the batteries run down.

Notes

1 James Vincent, “‘Godfathers of AI’ honored with Turing Award, the Nobel Prize of computing,” The Verge, 27 March 2019.

2 As quoted by Timothy B. Lee in “Artificial Intelligence Is Not Going to Kill Us All,” Slate, 9 May 2023.

3 Sissi Cao, “Meta’s A.I. Chief Yann LeCun Explains Why a House Cat Is Smarter Than The Best A.I.,” Observer, 15 February 2024.

4 Jaron Lanier, “There is No A.I.,” New Yorker, 20 April 2023.

5 Jaron Lanier, “How to Picture A.I.,” New Yorker, 1 March 2024.

6 Quoted in “Geoffrey Hinton tells us why he’s now scared of the tech he helped build,” by Will Douglas Heaven, MIT Technology Review, 2 May 2023.

7 Quoted in “Meta’s A.I. Chief Yann LeCun Explains Why a House Cat Is Smarter Than The Best A.I.,” by Sissi Cao, Observer, 15 February 2024.

8 James Bridle, Ways of Being: Animals, Plants, Machines: The Search for a Planetary Intelligence, Picador Macmillan, 2022; page 38.

9 Bridle, Ways of Being, page 100.

10 Blair Fix, “No, AI Does Not Pose an Existential Risk to Humanity,” Economics From the Top Down, 10 June 2023.

11 Timothy B. Lee, “Artificial Intelligence Is Not Going to Kill Us All,” Slate, 2 May 2023.