The rapid rise of social media over the past two decades has brought with it a surge in misinformation.

Online debates on topics such as vaccinations, presidential elections and the coronavirus pandemic are often as vociferous as they are laced with misleading information.

Perhaps more than any other topic, climate change has been subject to the organised spread of spurious information. This circulates online and frequently ends up being discussed in established media or by people in the public eye.

But what is climate change misinformation? Who is involved? How does it spread and why does it matter?

In a new paper, published in WIREs Climate Change, we explore the actors behind online misinformation and why social networks are such fertile ground for misinformation to spread.

What is climate change misinformation?

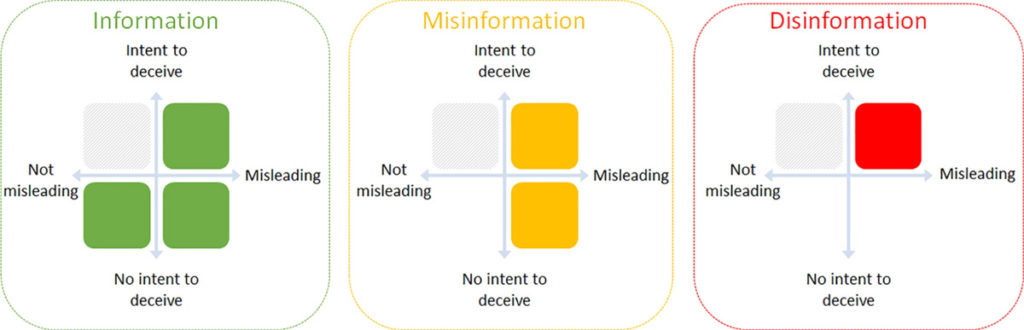

We define misinformation as “misleading information that is created and spread, regardless of whether there is intent to deceive”. It differs in a subtle, but important, way from “disinformation”, which is “misleading information that is created and spread with intent to deceive”.

Hierarchy of information (green), misinformation (yellow) and disinformation (red). Credit: Treen et al. (2020)

In the context of climate change research, misinformation may be seen in the types of behaviour and information which cast doubt on well-supported theories, or in those which attempt to discredit climate science.

These may be more commonly described as climate “scepticism”, “contrarianism” or “denialism”.

Climate alarmism may also be construed as misinformation, a point raised in recent online debates. Alarmism involves making exaggerated claims about climate change that are not supported by the scientific literature. However, there is a negligible amount of literature on climate alarmism compared with climate scepticism, which suggests it may be considerably less prevalent. For this reason, this article focuses on climate scepticism.

Who is involved?

Our review of the scientific literature suggests there are several different groups of actors involved in funding, creating and spreading climate misinformation.

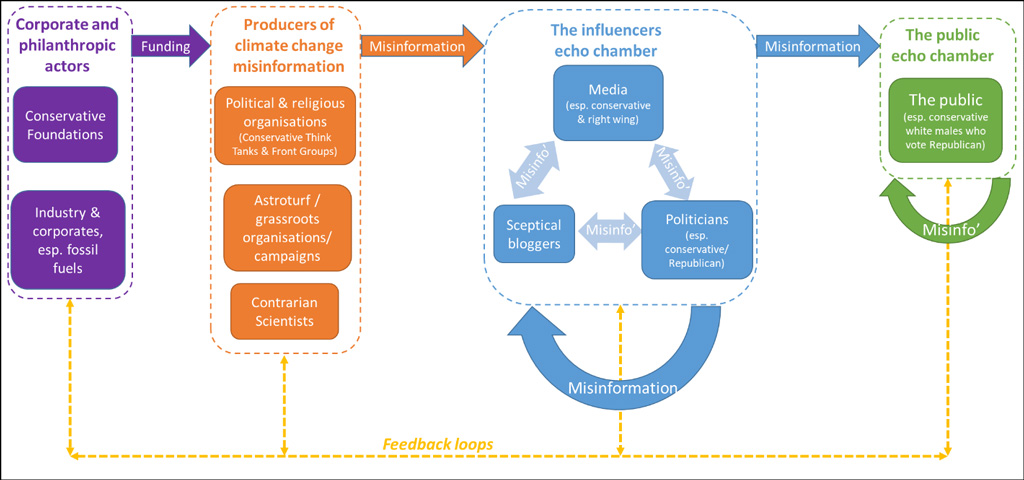

A schematic illustration of the climate change misinformation network. It shows the actors (purple) and producers (orange), as well as the echo chambers among influencers (blue) and the public (green). Credit: Treen et al. (2020)

Our findings, shown in the graphic above, highlight that the misinformation network begins with funding from corporate and philanthropic actors (purple) who have a vested interest in the climate change debate, particularly those with ties to fossil fuels.

This money goes to a range of groups involved in producing misinformation (orange). These groups – referred to variously as the “climate change denial machine” and “organised disinformation campaigns” – include political and religious organisations, contrarian scientists and online groups masquerading as grassroots organisations (known as “astroturfing”).

Those in positions of power or influence, such as the media, politicians and prominent bloggers, then repeat and amplify this information in an “influencers echo chamber” (blue), from which it reaches a wider audience (green).

How does it spread?

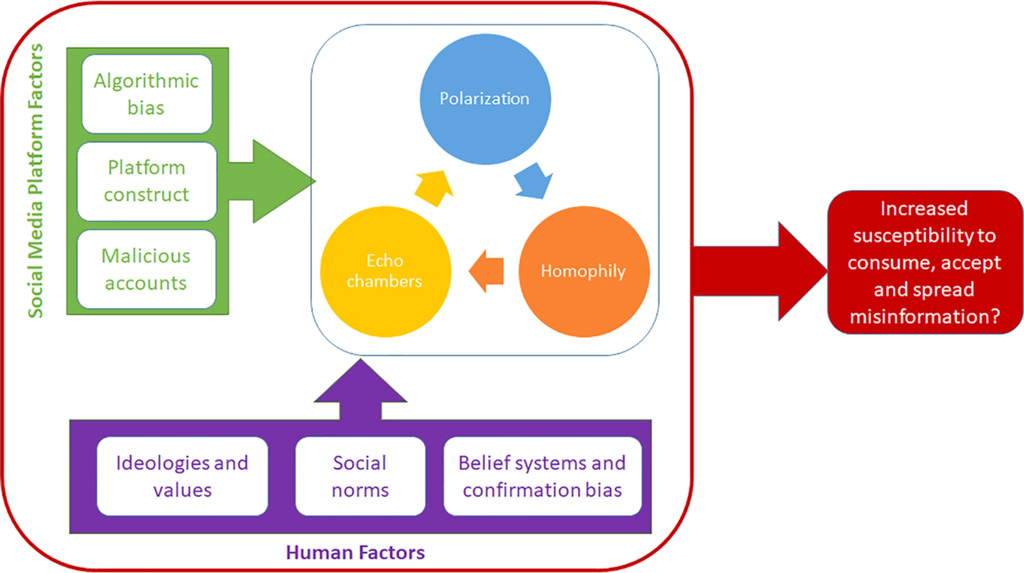

The spread of misinformation is intertwined with a number of online and offline social processes. One of these is “homophily” – the tendency for people to form social connections with those who are similar to themselves, as captured by the common saying “birds of a feather flock together”.

This behaviour is encouraged by social media platforms in the way new connections are recommended. Together with social norms and the observation that people tend to trust information from people in their social network, this can lead to “echo chambers” where information and misinformation echoes around a particular group. In turn, this can lead to polarisation, where communities can form around sharply contrasting positions on an issue.

Another factor which can contribute to polarisation is the way online social networks promote content based on being engaging and aligned with your previous viewed material rather than on trustworthiness. This is known as “algorithmic bias” and amplifies the psychological finding that people tend to prefer to consume information that matches their belief systems – known as “confirmation bias”. Social media platforms are also susceptible to the existence of malicious accounts which may produce and manipulate misleading content.

As the graphic below shows, all these human (purple section) and platform (green) factors come together in a melting pot on social media to potentially increase the susceptibility of social media users to spread, consume and accept misinformation.

These factors are all present in the climate change debate. Research using social network analysis suggests a strong homophily effect among social media users, who cluster into polarised groups on opposing sides of the climate debate, and also finds evidence of echo chambers. In addition, people’s attitudes to climate change have been found to be strongly correlated with their ideology, values and social norms.

Why does it matter?

A key strategy used by those who spread climate change misinformation is to create doubt in people’s minds, leading to what has been described as a “paralysing fog of doubt around climate change”. There are three main themes: doubt about the reality of climate change; doubt about its urgency; and doubt about the credentials of climate scientists.

Research suggests that climate misinformation can therefore contribute to public confusion and political inaction, to rejection of or reduced support for mitigation policies, and to the deepening of existing political polarisation.

Research into misinformation in other areas has found that it can provoke emotional responses in individuals, such as panic, suspicion, fear, worry and anger, and that these responses may in turn affect the decisions and actions people take. There are also concerns that misinformation poses a threat at a societal level, particularly to democracies.

Some take it a step further. For example, a 2017 study in the Journal of Applied Research in Memory and Cognition highlights “more insidious and arguably more dangerous elements of misinformation”, such as causing people to stop believing in facts altogether, and to lose trust in governments, impacting the “overall intellectual well-being of a society.”

What can be done about it?

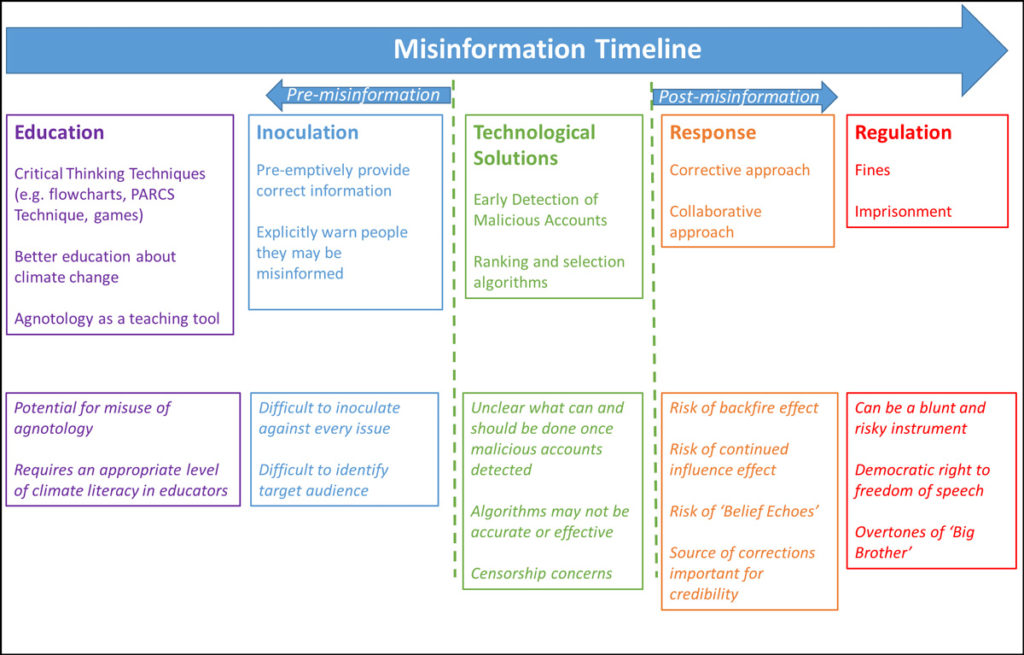

Scientific literature has put forward a range of ways to counteract misinformation. Summarised in the graphic below, these broadly fall into the categories of education (purple boxes), inoculation (blue), technological solutions (green), response (orange) and regulation (red).

A summary of the potential ways to counteract misinformation found in the literature, along with their criticisms and caveats. Credit: Treen et al. (2020)

Much of the literature that looks specifically at counteracting climate change misinformation focuses on educational approaches: teaching critical-thinking techniques, improving education about climate change, and using “agnotology” (the direct study of misinformation) as a teaching tool. While these approaches all help equip people to identify misinformation, agnotology carries a risk of misuse, and educators need a certain level of climate literacy.

Research on counteracting online misinformation also discusses “technocognition” or “socio-technological solutions”, which combine technological solutions with cognitive psychology theory.

These include “inoculation” before misinformation is received, which can mean pre-emptively providing correct information or explicitly warning people that they may be misinformed. Purely technological approaches include early detection of malicious accounts and using ranking and selection algorithms to reduce how much misinformation circulates.

Then there are responses and regulation: issuing a correction or taking a collaborative approach after the misinformation has been received, or even imposing punishments, such as fines or imprisonment.

However, all these solutions come with caveats. For inoculation strategies, it is difficult to inoculate against every issue and to identify the target audience. Technological solutions raise their own concerns, for example over censorship, over whether the algorithms are accurate or effective, and over the lack of a clear answer on what can and should be done once malicious accounts are detected.

Corrective approaches come with their own risks. For example, there is the “backfire effect”, whereby individuals receiving the correcting information come to believe in their original position even more strongly.

Then there is the “continued influence effect”, whereby subsequent retractions do not eliminate people’s reliance on the original misinformation. And there are “belief echoes”, where exposure to misinformation continues to shape attitudes after it has been corrected, even when this correction is immediate. There is also the caveat that the credibility of a correction depends on its source.

Regulation has been described as a “blunt and risky instrument” by a European Commission expert group. It is also potentially a threat to the democratic right to freedom of speech and has overtones of “Big Brother”.

In conclusion, it is important to recognise the role of misinformation in shaping our responses to climate change. Understanding the origins and spread of misinformation – especially through online networks – is imperative. Importantly, although there are several strategies to address misinformation, none of them is perfect. A combination of approaches will be needed to avoid misinformation continuing to disrupt public debate.

Treen, K. M. d’I., et al. (2020) Online misinformation about climate change, WIREs Climate Change, doi:10.1002/wcc.665
